Perttu Korhonen

Good analysis requires new discoveries, creativity, even luck. But innovation is not just a matter of chance — it favours those who are ready for it, which in this case means having the right data. Utilising micro-data to answer new and different questions is a good start, but the next step is to link such item-level information from various sources together. That way we can create analytical opportunities beyond the sum of the parts. In this post I show how a unique linked dataset on the UK housing market reveals that buy-to-let buyers secure a greater discount from the asking price than other buyers.

On 4 November 2012 the Financial Times claimed that more than half of outstanding mortgages in London, South East England and South West England were interest only. The number surprised many in the Bank, including my boss. So a group of analysts was given 24 hours to try to work out what percentage of mortgages in those areas were interest only.

The challenge was that none of the regulatory or statistical forms provided the data. Twenty or so different cuts of the UK banks’ mortgage data were available, and they showed breakdowns by repayment type and by geography separately. But no one had anticipated a question combining the two.

When the joint distribution is unknown, the answer must be based on the available marginal distributions. Lack of data must be compensated for by other evidence and expert judgement.
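A short sketch of why marginals alone leave so much room for judgement: if a share p of all mortgages is interest only and a share q of all mortgages is in the regions of interest, the Fréchet bounds only pin the share that is both between max(0, p + q - 1) and min(p, q). Dividing by q gives bounds on the interest-only share within the regions. The percentages below are invented for illustration and are not the 2012 figures.

```python
def frechet_bounds(p_io: float, q_region: float) -> tuple[float, float]:
    """Bounds on P(interest only AND in region) given only the two marginals."""
    lower = max(0.0, p_io + q_region - 1.0)
    upper = min(p_io, q_region)
    return lower, upper


def conditional_share_bounds(p_io: float, q_region: float) -> tuple[float, float]:
    """Bounds on P(interest only | in region), the kind of figure at issue."""
    lower, upper = frechet_bounds(p_io, q_region)
    return lower / q_region, upper / q_region


# Hypothetical marginals: 40% of all mortgages interest only,
# 45% of all mortgages located in the three regions.
lo, hi = conditional_share_bounds(0.40, 0.45)
print(f"{lo:.0%} to {hi:.0%}")
```

With these made-up inputs the interest-only share within the regions could be anywhere from 0% to about 89%, which is why other evidence has to narrow the range.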

In the end, three different regulatory and statistical forms, plus the Moody’s report underlying the FT article, were used to assess the claim. Without sufficiently disaggregated data we could not give a precise figure, and could therefore only say that the number in the article appeared “broadly factually correct.”

Depth and precision

If we had had a database of every outstanding UK mortgage, capturing a set of standard attributes of the product, the mortgaged property and the borrower, the question could have been answered within minutes. A set of micro-data can hold many answers, whereas forms can often answer only the specific questions they were designed for.

Central banks increasingly have access to such item-level information. The FPC’s recent limits on high loan-to-income (LTI) lending relied entirely on the FCA’s Product Sales Data (PSD), a loan-level collection. The PSD was started in 2005 to monitor retail conduct risk. This summer, the PSD will be expanded to capture the stock of outstanding mortgages in addition to new sales. If the same question about interest-only mortgages crops up again, we will be able to answer it quickly and precisely.

On its own, the PSD will still not capture buy-to-let lending. However, while attempts to mix and match different collections of aggregated data left too much to guesswork, sets of micro-data really can add value to one another. For instance, the PSD alongside another suitable dataset could start to reveal trends in the buy-to-let lending market.

With help from the Bank’s new Data Lab, I’ve been able to link together the PSD and other sets of housing micro-data. Land Registry’s Price Paid data captures transactions by individual house buyers and is freely available under the Open Government Licence. More recently, a variant of the database has also been published that identifies whether a transaction was paid for in cash or financed with a mortgage.

By linking PSD mortgages to the associated records in the Price Paid data, I’ve been able to identify when a buy-to-let mortgage has been originated. Because the PSD does not cover buy-to-let lending, where the Price Paid data indicates a charge on the property but no suitable loan can be found in the PSD, the transaction is most likely financed with a buy-to-let loan. The remaining transactions, with no charge recorded, should mainly be cash purchases. Below I explain more about how I went about matching the data.
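The inference rule described above can be sketched as a small classifier. This is a minimal illustration under stated assumptions: the flags `has_charge` (from the Land Registry cash/mortgage variant) and `psd_match` (the outcome of record linkage) are hypothetical field names, and real data would need handling for inconsistent records.

```python
from collections import Counter
from enum import Enum


class Funding(Enum):
    PSD_MORTGAGE = "mortgage matched in the PSD"
    LIKELY_BUY_TO_LET = "charge registered, no PSD match"
    LIKELY_CASH = "no charge registered, no PSD match"


def classify(has_charge: bool, psd_match: bool) -> Funding:
    """Infer how a Price Paid transaction was funded.

    has_charge: hypothetical flag from the cash/mortgage variant of Price Paid.
    psd_match: hypothetical flag, True if linkage found a suitable PSD loan.
    """
    if psd_match:
        return Funding.PSD_MORTGAGE
    if has_charge:
        # The PSD excludes buy-to-let lending, so a charge with no PSD
        # match most likely reflects a buy-to-let loan.
        return Funding.LIKELY_BUY_TO_LET
    return Funding.LIKELY_CASH


# Hypothetical (has_charge, psd_match) flags for four transactions
flags = [(True, True), (True, False), (False, False), (True, False)]
counts = Counter(classify(c, m) for c, m in flags)
print(counts)
```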

Weighing probabilities

When two related datasets do not share common identifiers, linking must be based on other common information. But this can be challenging.

For example, both Price Paid data and PSD include the postcode of the property. But dozens of properties can share a postcode.

Similarly, the price paid for a property should be close to – but is not necessarily the same as – the lending bank’s valuation of it. And the mortgage account is generally opened some days before the sale completes.
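One common way to turn these loose correspondences into a manageable set of candidate pairs is "blocking": compare only records that share a postcode, then discard pairs whose dates or values are implausibly far apart. The sketch below assumes hypothetical record fields and tolerances (a 30-day window with the loan opened on or before completion, and a 25% price gap); it is not the method or the thresholds used for the linked dataset.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Sale:  # a Price Paid record (hypothetical fields)
    sale_id: str
    postcode: str
    completion: date
    price: int


@dataclass
class Loan:  # a PSD mortgage record (hypothetical fields)
    loan_id: str
    postcode: str
    opened: date
    valuation: int


def candidate_pairs(loans, sales, max_days=30, max_price_gap=0.25):
    """Block on postcode, then keep pairs with plausible date and value gaps."""
    by_postcode: dict[str, list[Sale]] = {}
    for s in sales:
        by_postcode.setdefault(s.postcode, []).append(s)

    pairs = []
    for loan in loans:
        for sale in by_postcode.get(loan.postcode, []):
            day_gap = (sale.completion - loan.opened).days
            # The account is usually opened on or before completion.
            if not 0 <= day_gap <= max_days:
                continue
            if abs(sale.price - loan.valuation) > max_price_gap * sale.price:
                continue
            pairs.append((loan.loan_id, sale.sale_id, day_gap, sale.price - loan.valuation))
    return pairs


loans = [Loan("L1", "AB1 2CD", date(2014, 3, 1), 250_000)]
sales = [
    Sale("S1", "AB1 2CD", date(2014, 3, 7), 250_000),
    Sale("S2", "AB1 2CD", date(2014, 3, 2), 300_000),
    Sale("S3", "ZZ9 9ZZ", date(2014, 3, 2), 250_000),
]
print(candidate_pairs(loans, sales))
```

Here the loan survives blocking against two sales in its postcode, so blocking alone cannot decide the link; a scoring step has to choose between them.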

Often a mortgage can be paired with more than one candidate transaction. Should the loan be linked to a transaction where the dates are just one day apart but the price and the valuation differ by £50,000? Or would a better match be one where the dates are six days apart but the valuation equals the price paid? What about one with a two-day gap and a £10,000 difference, but for which the property types do not match?

Probabilistic record linkage techniques offer a solution to the problem of choosing between alternative pairs. Take a neighbourhood in which we know 5% of properties are detached houses and 95% are flats. If the two sources agree that a property is a detached house, that agreement should carry more weight than agreement that a property is a flat, because it is far less likely to happen by chance.
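In the classic Fellegi-Sunter framework this intuition is captured by an agreement weight log2(m/u), where m is the probability that a field agrees for a true match and u is the probability that it agrees for a non-match, which for a specific value can be approximated by how common that value is. The sketch below assumes a hypothetical m of 0.95 and the 5%/95% neighbourhood from the example.

```python
import math


def agreement_weight(m: float, u: float) -> float:
    """Fellegi-Sunter agreement weight log2(m / u).

    m: P(field agrees | records truly match), reflecting recording accuracy.
    u: P(field agrees | records do not match), roughly the value's frequency.
    """
    return math.log2(m / u)


M = 0.95  # hypothetical: property type recorded consistently 95% of the time
shares = {"detached": 0.05, "flat": 0.95}
weights = {ptype: agreement_weight(M, share) for ptype, share in shares.items()}
print(weights)
```

Agreement on "detached" adds about 4.25 bits of evidence for the pair, while agreement on "flat" adds none, since it is what a random pair in this neighbourhood would show anyway.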

Such techniques can even take into account the possibility that either source contains erroneous attributes, and still use all the available evidence. For example, if only one of the sources records the property as a detached house, probabilistically less faith should be put in that pair on that feature. But after considering the rest of the available information, the pair can still be accepted as a link.
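A sketch of how this plays out for the three candidates in the example above, assuming Fellegi-Sunter scoring: each field contributes log2(m/u) when it agrees and log2((1-m)/(1-u)) when it disagrees, so a disagreement is a finite penalty rather than an automatic veto. The fields, thresholds and m/u values are all hypothetical; in practice they would be estimated from the data, for example with the EM algorithm.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldModel:
    m: float  # P(agree | true match)
    u: float  # P(agree | non-match)

    def weight(self, agrees: bool) -> float:
        if agrees:
            return math.log2(self.m / self.u)
        # Disagreement lowers the score but does not rule the pair out.
        return math.log2((1 - self.m) / (1 - self.u))


# Hypothetical parameters for three comparison fields
MODELS = {
    "date_close": FieldModel(m=0.9, u=0.3),    # account opened within 3 days of completion
    "price_close": FieldModel(m=0.8, u=0.1),   # valuation within £5,000 of price paid
    "type_agrees": FieldModel(m=0.95, u=0.5),  # both sources give the same property type
}


def match_score(day_gap: int, price_gap: int, same_type: bool) -> float:
    """Sum of per-field weights for one candidate pair."""
    comparisons = {
        "date_close": day_gap <= 3,
        "price_close": abs(price_gap) <= 5_000,
        "type_agrees": same_type,
    }
    return sum(MODELS[f].weight(agrees) for f, agrees in comparisons.items())


candidates = {
    "1 day, £50,000 apart": match_score(1, 50_000, True),
    "6 days, price = valuation": match_score(6, 0, True),
    "2 days, £10,000 apart, types differ": match_score(2, 10_000, False),
}
best = max(candidates, key=candidates.get)
print(best, round(candidates[best], 2))
```

With these made-up parameters the six-day candidate with an exact valuation wins (about 1.1, against roughly 0.3 and -3.9); different parameters could change the ranking.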

Having completed the Land Registry – PSD match, I then applied a similar approach to bring in comprehensive information on online listings of properties for sale and rent.

A wealth of insight

The resulting dataset can be used to establish how many properties come on to the market, and how many sell or fail to sell. Changes in asking prices and in properties’ time on the market can be observed and used to assess housing market conditions.
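As an illustration of the kind of per-listing measures involved, the sketch below computes time from first listing to completion and the number and size of asking-price cuts. It assumes a hypothetical structure in which each listing's asking-price history is a list of (date, price) pairs, oldest first, already linked to a completion date.

```python
from datetime import date


def listing_summary(price_history: list[tuple[date, int]], completion: date) -> dict:
    """Summarise one linked listing.

    price_history: hypothetical (date, asking price) entries, oldest first.
    completion: completion date from the linked Price Paid record.
    """
    first_listed, initial_ask = price_history[0]
    _, last_ask = price_history[-1]
    # Count each step in the history where the asking price fell.
    reductions = sum(
        1 for (_, a), (_, b) in zip(price_history, price_history[1:]) if b < a
    )
    return {
        "days_to_completion": (completion - first_listed).days,
        "price_reductions": reductions,
        "total_reduction_pct": 100 * (initial_ask - last_ask) / initial_ask,
    }


history = [(date(2014, 1, 6), 300_000), (date(2014, 2, 3), 290_000), (date(2014, 2, 20), 285_000)]
print(listing_summary(history, date(2014, 5, 1)))
```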

The role of funding also becomes clearer. It can be determined whether the buyer is an owner-occupier with a mortgage or an investor – and whether particular types of buyer are driving the market. The combined dataset also sheds light on how closely banks’ property valuations match purchase prices.

Likewise, it can be seen whether a recently purchased property is subsequently marketed for rent. The rental yield sought by these new buy-to-let owners can be compared with that on lettings by existing landlords. And all of this can be broken down by region or type of property.
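A minimal sketch of one way such a comparison could be set up, assuming the yield is measured as a gross yield (annual asking rent as a percentage of the price paid) and compared by median. The figures are invented, and for existing landlords the choice of price (original purchase price or a current value) is an open assumption.

```python
from statistics import median


def gross_yield_pct(monthly_asking_rent: float, price: float) -> float:
    """Annual asking rent as a percentage of the property price (gross yield)."""
    return 100 * 12 * monthly_asking_rent / price


# Hypothetical (monthly asking rent, price) pairs
new_btl = [(1_250, 300_000), (1_500, 400_000), (900, 240_000)]
existing = [(1_100, 220_000), (1_600, 350_000), (950, 200_000)]

new_median = median(gross_yield_pct(r, p) for r, p in new_btl)
existing_median = median(gross_yield_pct(r, p) for r, p in existing)
print(f"new buy-to-let: {new_median:.1f}%, existing landlords: {existing_median:.1f}%")
```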

Not only does the combined dataset allow more precise analysis, it also enables new types of analysis. Getting closer to the decision-making of individual agents can provide invaluable insight to feed into agent-based models. Some of the statistical trickery needed for small datasets can be replaced with machine-learned models.

Case: The London boom of 2013-14

Some sample visualisations of the dataset can shed light on recent developments in the housing market.

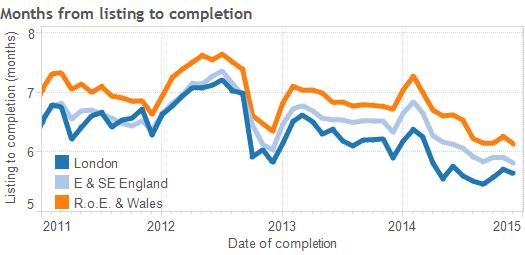

As an indication of increased buyer activity, the average time from first marketing a property to completing its sale fell over the course of 2013 and 2014, with London leading the pack.

Data: WhenFresh (Zoopla listings), Land Registry Price Paid, ONS Postcode Directory

In periods when buyers are quick to snap up new properties coming on to the market, sellers are less likely to need to reduce their asking price while the property is listed.

Data: WhenFresh (Zoopla listings), Land Registry Price Paid, ONS Postcode Directory

While buyers in 2011-12 still managed to negotiate 4-5% off the last asking price, buyers subsequently paid the full asking price increasingly often. In London the average discount even turned negative, as in many cases the accepted offer was higher than the seller’s asking price.

Data: WhenFresh (Zoopla listings), Land Registry Price Paid, ONS Postcode Directory

After splitting the data by buyer type, it appears that first-time buyers consistently achieve the smallest discount from the asking price. In London, just before the market started to normalise in July 2014, first-time buyers ended up paying almost 2% on top of the asking price.

Data: WhenFresh (Zoopla listings), Land Registry Price Paid, Land Registry Cash/Mortgage data, FCA Product Sales Data on mortgages, ONS Postcode Directory
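For concreteness, the discount can be read as the gap between the last asking price and the price paid, as a percentage of the asking price, so that it turns negative when a buyer pays over the asking price. The sketch below assumes that definition and averages it by buyer type; the buyer-type labels follow the text, but the transactions are made up.

```python
from collections import defaultdict
from statistics import mean


def discount_pct(last_asking_price: float, price_paid: float) -> float:
    """Positive: paid below the last asking price; negative: paid above it."""
    return 100 * (last_asking_price - price_paid) / last_asking_price


# Hypothetical (buyer type, last asking price, price paid) records
sales = [
    ("first-time buyer", 500_000, 510_000),
    ("first-time buyer", 300_000, 303_000),
    ("buy-to-let", 400_000, 382_000),
    ("cash", 600_000, 585_000),
]

by_type: defaultdict[str, list[float]] = defaultdict(list)
for buyer_type, ask, paid in sales:
    by_type[buyer_type].append(discount_pct(ask, paid))

average_discount = {k: mean(v) for k, v in by_type.items()}
print(average_discount)
```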

Looking at the London area in April-September 2014 further confirms that home owners (first-time buyers and home movers) put in higher bids than investors (cash and buy-to-let buyers) in most boroughs. Notably, healthy discounts were available to buyers in Camden, Westminster, and Kensington and Chelsea, the former London hotspot.

Average discount from asking price by Local Authority in the London area, completions in Q2-Q3 2014

Data: WhenFresh (Zoopla listings), Land Registry Price Paid, Land Registry Cash/Mortgage data, FCA Product Sales Data on mortgages, ONS Postcode Directory

The bottom line

Traditionally, data were collected with particular questions in mind. But the problem with such narrowly defined datasets is that no data governance can foresee the needs of future analytical discovery. Linked micro-data can create the environment that maximises the chances of new findings.

As Louis Pasteur once said, “chance favours the prepared mind”.

This article was written whilst Perttu was working in the Bank’s Banking and Insurance Analysis Division.

Bank Underground is a blog for Bank of England staff to share views that challenge – or support – prevailing policy orthodoxies. The views expressed here are those of the authors, and are not necessarily those of the Bank of England, or its policy committees.

If you want to get in touch, please email us at bankunderground@bankofengland.co.uk